Embedded control and machine learning technologies have advanced dramatically over the last decade. Building state-of-the-art applications in a modular, efficient way has never been more achievable, and that lets us ask questions that could not be asked before. And that’s exactly what we focus on at Quanser: asking relevant academic questions and then arming researchers with the tools to answer them.

Cyber-physical Systems for Modern Applications

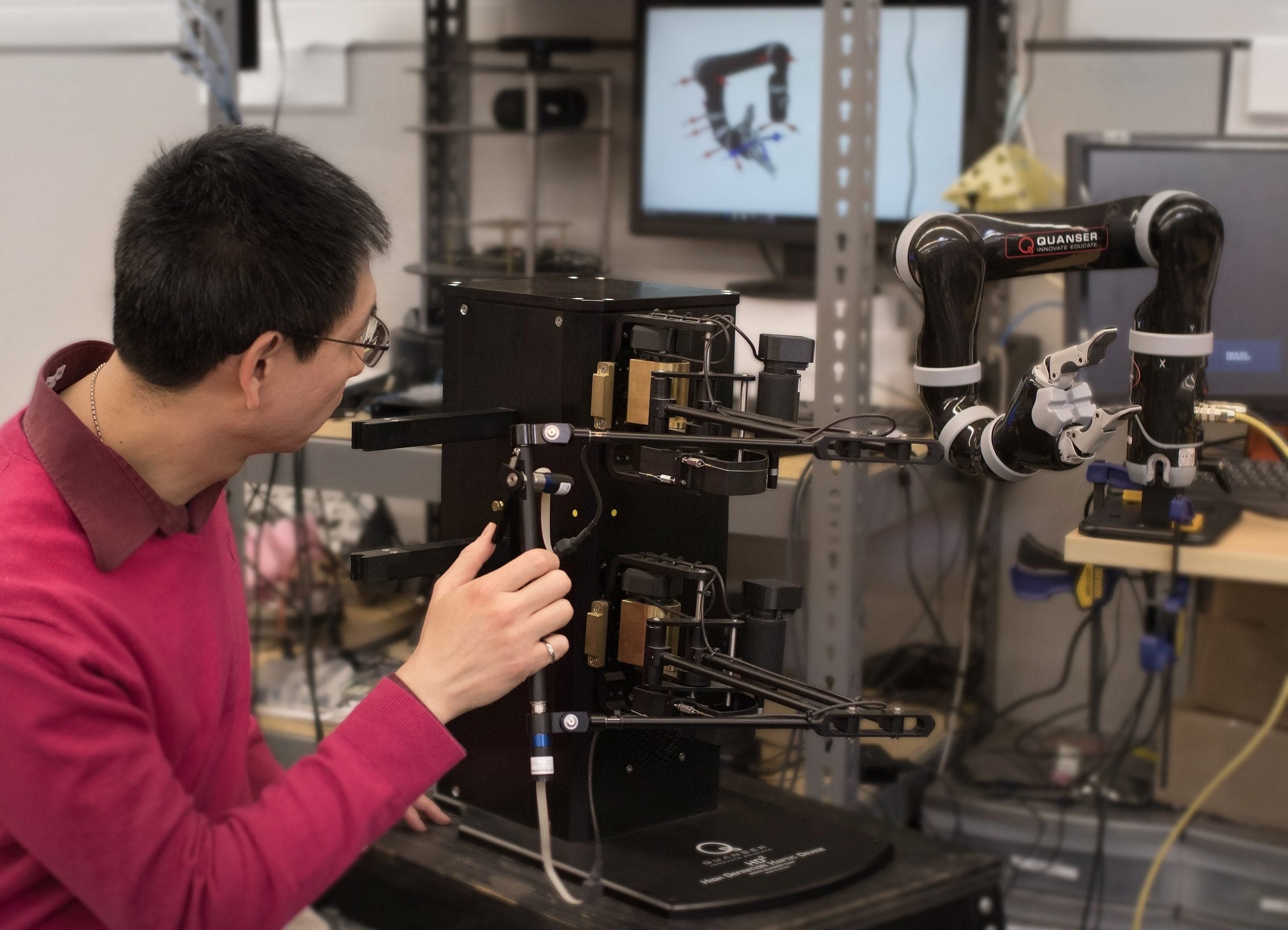

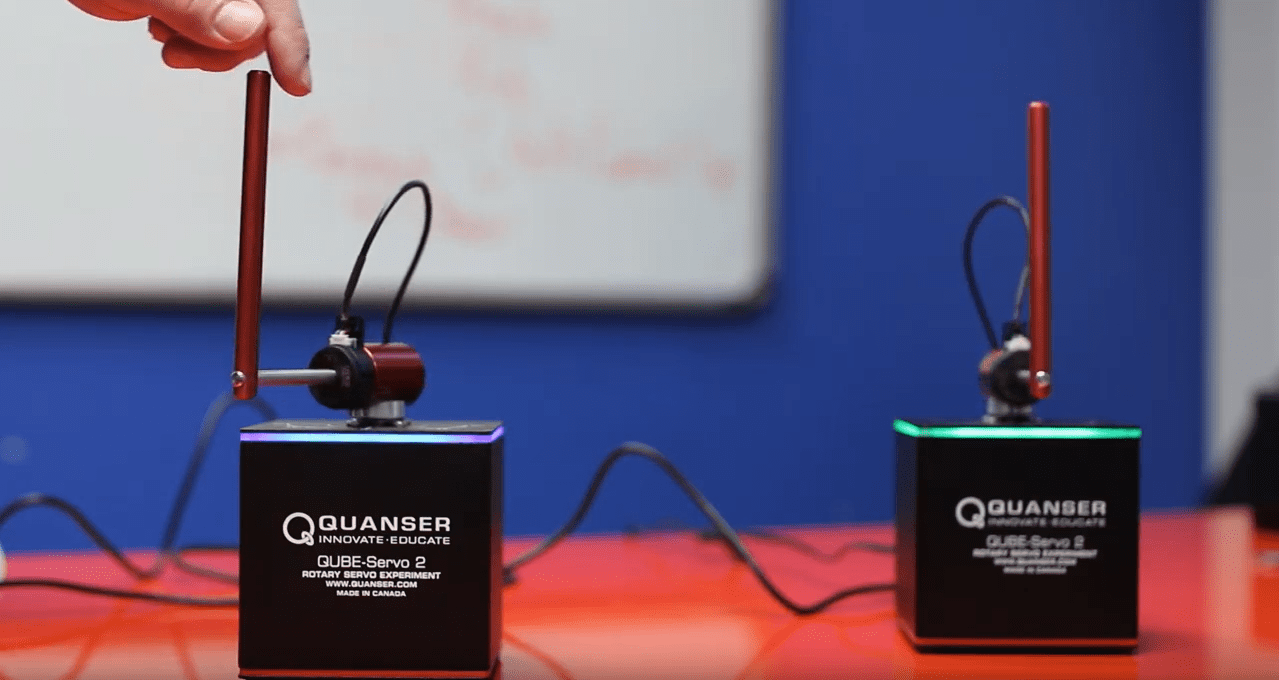

At an academically appropriate level of abstraction, we see the ‘cyber-physical’ system as a key building block for designing applications in an intelligent, connected world. Consider one of our most fundamental devices: QUBE-Servo 2.

Do, Sense, Think, and Talk

On its own, this device is essentially a ‘dumb’ mechanical system. It has a single actuated degree of freedom, driven by the base motor. One sensing channel measures the rotary base angle, and a second can measure the angle of the inverted pendulum. That gives us the ability to do (actuate) and sense (measure) something. QUBE-Servo 2 connects to a computer over USB, but the controller can also be deployed to an embedded processor such as a Raspberry Pi 3B+. Doing so allowed us to create an IoT application running on the embedded target.

In this extended example, the devices gain the ability to think (running the control algorithms and any other logic). They can also talk, using TCP/IP communication between the two QUBE-Servo 2 nodes. In this sense, each QUBE-Servo 2 becomes a ‘cyber-physical’ device capable of doing, sensing, thinking, and talking. The specific logic in the IoT demo above is not essential to the cyber-physical theme, but you can find more information on it here. Also check out the Quanser Aero IoT Demo below.
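The message format used between the two nodes in the demo isn't described here, so the following is only a minimal sketch of what "talking" over TCP/IP could look like, assuming a hypothetical newline-delimited JSON message carrying each node's base angle. The names `serve_once`, `exchange_state`, and the `base_angle_rad` field are illustrative inventions. Both nodes run on localhost so the example is self-contained; on real hardware each side would presumably run on its own embedded target.

```python
import json
import socket
import threading


def encode(msg: dict) -> bytes:
    # Hypothetical framing: one JSON object per line.
    return (json.dumps(msg) + "\n").encode("utf-8")


def serve_once(server: socket.socket, my_angle: float, inbox: list) -> None:
    """Node B (hypothetical): accept one peer, read its state, reply with our own."""
    conn, _ = server.accept()
    with conn, conn.makefile("r", encoding="utf-8") as reader:
        inbox.append(json.loads(reader.readline()))
        conn.sendall(encode({"node": "B", "base_angle_rad": my_angle}))


def exchange_state(host: str, port: int, my_angle: float) -> dict:
    """Node A (hypothetical): send our base angle and block until the peer replies."""
    with socket.create_connection((host, port)) as sock, \
            sock.makefile("r", encoding="utf-8") as reader:
        sock.sendall(encode({"node": "A", "base_angle_rad": my_angle}))
        return json.loads(reader.readline())


def demo() -> tuple:
    """Run both nodes on localhost; port 0 lets the OS choose a free port."""
    with socket.create_server(("127.0.0.1", 0)) as server:
        port = server.getsockname()[1]
        inbox: list = []
        peer = threading.Thread(target=serve_once, args=(server, 0.25, inbox))
        peer.start()
        reply = exchange_state("127.0.0.1", port, -0.10)
        peer.join()
    return reply, inbox


if __name__ == "__main__":
    reply, inbox = demo()
    print("A received:", reply)
    print("B received:", inbox[0])
```

Newline-delimited JSON is chosen only because it gives simple message boundaries on a TCP byte stream; a real deployment might use a binary format or a messaging layer instead.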

Machine Learning and Reinforcement Learning with QUBE-Servo 2

Researchers have been using QUBE-Servo 2 as a cyber-physical system to develop machine learning and reinforcement learning applications for some time. Here are some of the latest examples, all published this year.

Researchers at Korea University of Technology and Education (KOREATECH) and the Electronics and Telecommunications Research Institute (ETRI) developed a controller based on a Deep Q-Network (DQN) for the unstable, highly nonlinear QUBE-Servo 2 system, with monitoring and learning handled by a remotely located learning agent on the EdgeX platform.

At the Korea Advanced Institute of Science and Technology (KAIST), researchers compared our out-of-the-box energy-based swing-up controller with an enhanced version that used Entropy Search, a Bayesian optimization method, to improve swing-up performance across a range of initial conditions.
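For readers unfamiliar with energy-based swing-up, here is a minimal sketch of the classic energy-pumping law described by Åström and Furuta. It is not Quanser's shipped controller: the model (pivot acceleration `u` as the input, pendulum angle `alpha = 0` when upright), the gain `k`, the saturation `u_max`, and the pendulum parameters are all assumptions, and the signs change with other angle and actuation conventions. The idea is to compute the pendulum's energy and push the pivot in whichever direction moves that energy toward the upright value; a separate stabilizing controller typically takes over near the top.

```python
import math

G = 9.81  # gravitational acceleration [m/s^2]


def sign(x: float) -> int:
    """Return -1, 0, or +1 (math.copysign never returns 0)."""
    return (x > 0) - (x < 0)


def pendulum_energy(alpha, alpha_dot, J_p, m_p, l_p, g=G):
    """Energy with alpha = 0 upright: 0 at upright rest, -2*m*g*l hanging at rest."""
    return 0.5 * J_p * alpha_dot ** 2 + m_p * g * l_p * (math.cos(alpha) - 1.0)


def swing_up_command(alpha, alpha_dot, J_p, m_p, l_p,
                     k=50.0, u_max=5.0, E_ref=0.0, g=G):
    """Energy pumping: u = sat(k * (E - E_ref) * sign(alpha_dot * cos(alpha))).

    Assumed model: J_p*alpha_ddot - m*g*l*sin(alpha) + m*l*u*cos(alpha) = 0,
    which gives dE/dt = -m*l*u*alpha_dot*cos(alpha), so this u moves E toward E_ref.
    """
    E = pendulum_energy(alpha, alpha_dot, J_p, m_p, l_p, g)
    u = k * (E - E_ref) * sign(alpha_dot * math.cos(alpha))
    return max(-u_max, min(u_max, u))  # saturate the commanded pivot acceleration


if __name__ == "__main__":
    # Hypothetical parameters for illustration, not QUBE-Servo 2 values.
    J_p, m_p, l_p = 1e-3, 0.025, 0.06
    print(swing_up_command(math.pi - 0.1, 1.0, J_p, m_p, l_p))
```

Note that at exact hanging rest, `sign(alpha_dot * cos(alpha))` is zero and so is the command; in practice a small initial disturbance gets the swing started. Tuning choices such as `k` and `u_max` are the kind of parameters an optimizer like Entropy Search could be used to adjust.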

In other work, a team at the Max Planck Institute for Intelligent Systems worked on Meta-Learning Acquisition Functions for Bayesian Optimization, and a team at Uppsala University worked on Learning Convex Bounds for Linear Quadratic Control Policy Synthesis. In collaboration with KTH Royal Institute of Technology, the Uppsala team also analyzed Robust Exploration in Linear Quadratic Reinforcement Learning.

Possibilities are Endless

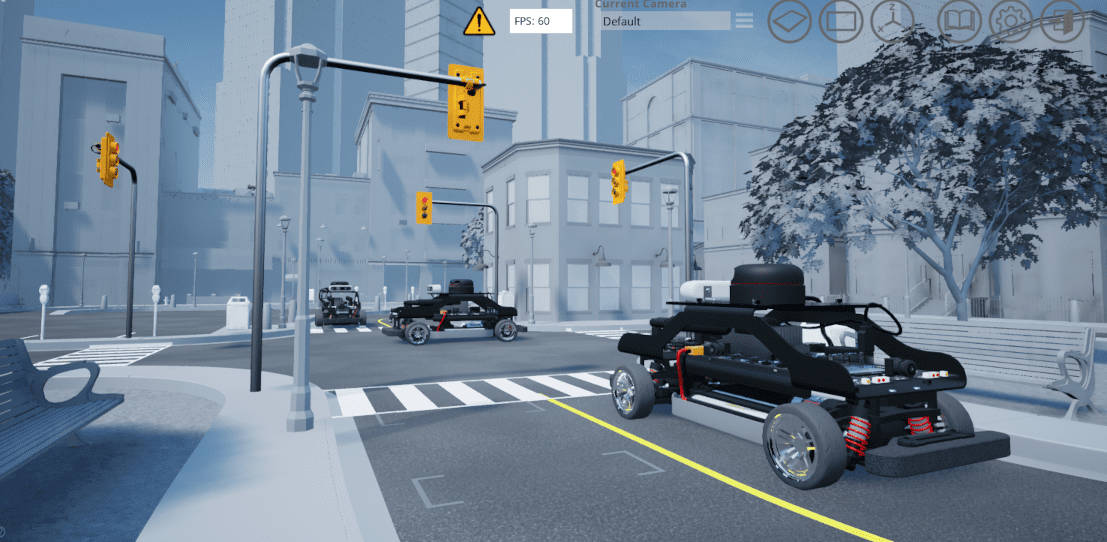

All of this was done with a fundamental control platform like QUBE-Servo 2. Now consider an aerospace application using Quanser AERO or QDrone, or even our upcoming QCar self-driving platform (you can find more information on it here).

We are excited to see the work being done with our systems and, furthermore, to think about the possibilities and opportunities it opens up. Comment below and let’s talk more!

See what I did there?