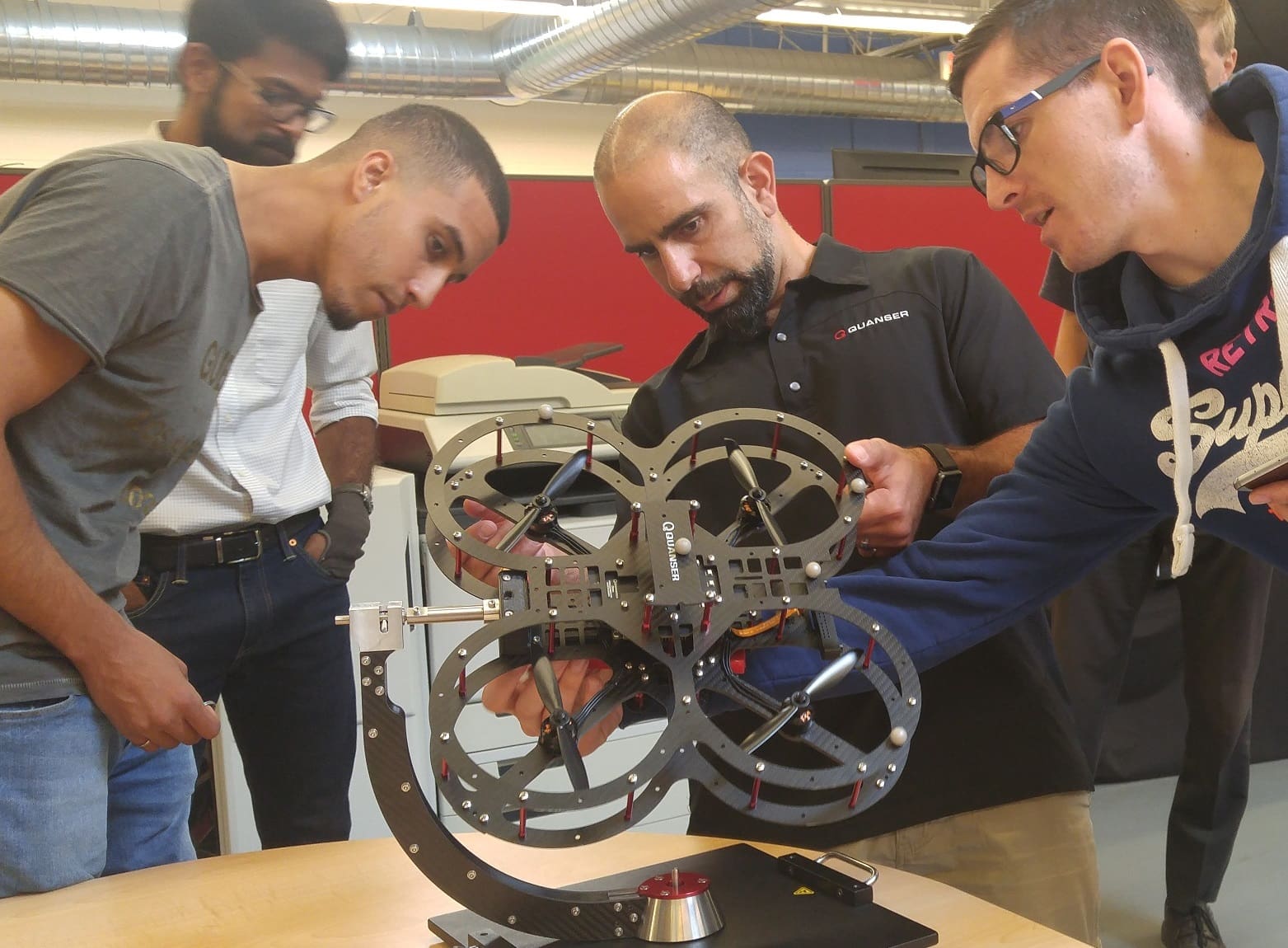

My first blog post on the QCar and the Self-Driving Car Research Studio looked at the hardware. My second one talked about the software side. This month, we’re going to focus on the studio contents and the applications we have been developing over the last couple of months.

The Complete Solution

Quanser has always been about providing more than just the hardware or software, and our second research studio is no exception. This is a complete, research-grade solution. The studio includes two or more QCars, a high-end PC with all the supporting development tools and APIs pre-installed and configured, network infrastructure, 700 square feet (65 square meters) of custom vinyl road panels, and other supporting accessories. Within hours of unpacking the pallet of hardware, you can be up and running a fully autonomous vehicle. Other devices on the market treat lane following and obstacle detection as the end goal. For the Self-Driving Car Research Studio, that is just the starting point!

On the Road to Success

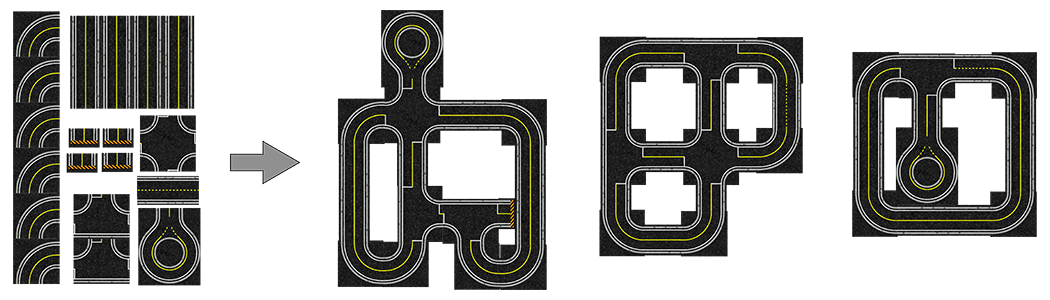

Probably the most visible and challenging aspect of the studio solution is the roads. We had to consider the surface texture, re-usability, ease-of-use, and reflectivity. We tried stickers, canvas, cardboard, foam tiles, and even wallpaper! The solution we ultimately landed on was a custom reinforced vinyl. We tested the vinyl panels in our office for a couple of months, walking over them in our winter boots, and the road still looks good – better than our regional highway!

The vinyl panels can be easily rearranged and cut. You can use them to make a road configuration that fits your space, and easily make changes to test different scenarios. Roll out a path down a hallway for an afternoon, or make a permanent installation in a lab space. If you have a really large space, you can order additional sets to build out your scale model world. We can’t wait to see what you make!

A Modular Application Approach

Over the last year, our Applications Team at Quanser has been developing a new approach for delivering examples to our research customers. Rather than just one or two monolithic pieces of code that demonstrate a complex example, we have broken everything down into components, tasks, and applications.

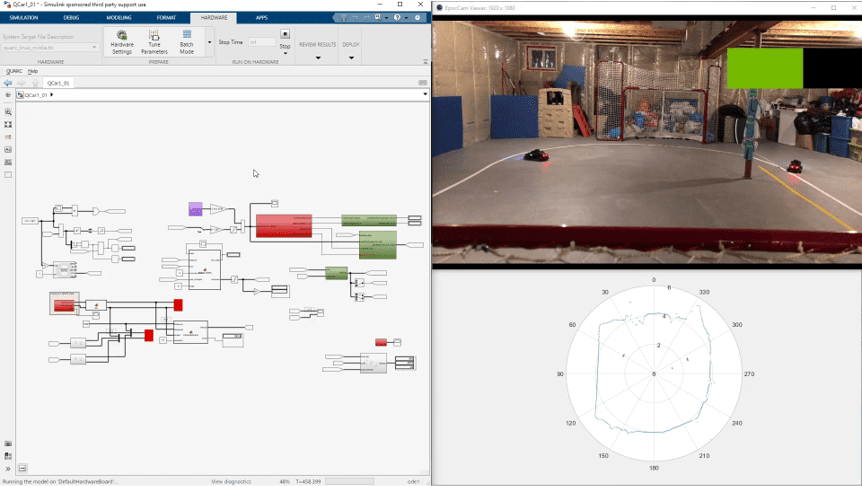

Components are the fundamental elements such as API calls in C or Python, or QUARC blocks in Simulink. These are used to make tasks, which are reusable building blocks providing higher-level functions. This could be an Essential Unit that uses API calls to read the encoders and IMU to give you speed and direction, or an Interpretation Unit that takes in a video stream, detects lines, and provides a heading to stay in the lane. Tasks are combined to make applications such as following a lane and braking at an intersection when a stop sign is identified within a certain distance from the car.
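To make the layering concrete, here is a minimal Python sketch of how components, tasks, and an application could fit together. It is only an illustration: the function names, the constants, and the simple proportional control are assumptions made for this example and are not taken from the studio's actual code.

```python
import math
from dataclasses import dataclass
from typing import Optional

# Hypothetical constants for illustration only; not QCar specifications.
COUNTS_PER_REV = 720      # encoder counts per wheel revolution
WHEEL_RADIUS_M = 0.03     # wheel radius in metres
TARGET_SPEED_MPS = 1.0    # cruising speed the application aims for


def speed_task(counts_per_s: float) -> float:
    """Task: convert an encoder count rate (a component reading) to m/s."""
    return counts_per_s / COUNTS_PER_REV * 2.0 * math.pi * WHEEL_RADIUS_M


def lane_task(lane_offset_px: float, gain: float = 0.005) -> float:
    """Task: turn a lane-centre offset in pixels into a steering angle in rad.

    A real Interpretation Unit would first detect the lines in a video
    frame; here the offset is assumed to be available already.
    """
    return -gain * lane_offset_px


@dataclass
class Command:
    throttle: float  # 0.0 (stopped) to 1.0 (full)
    steering: float  # radians


def lane_follow_with_stop(
    counts_per_s: float,
    lane_offset_px: float,
    stop_sign_dist_m: Optional[float],
    brake_within_m: float = 0.5,
) -> Command:
    """Application: follow the lane, and brake when a stop sign is close."""
    steering = lane_task(lane_offset_px)
    if stop_sign_dist_m is not None and stop_sign_dist_m <= brake_within_m:
        return Command(throttle=0.0, steering=steering)
    # Simple proportional speed hold, clamped to the valid throttle range.
    speed_error = TARGET_SPEED_MPS - speed_task(counts_per_s)
    throttle = max(0.0, min(1.0, 0.5 * speed_error))
    return Command(throttle=throttle, steering=steering)
```

The application function contains no sensor or vision logic of its own. It only wires tasks together, which is what keeps the pieces reusable.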

The goal of this modular approach is to allow researchers to pick and choose tasks from the provided examples without the need to understand how each task works in great detail. It also means that if you want to implement your own lane following algorithm, you can easily remove a single task and replace it with your own.
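As a rough sketch of what swapping a task might look like in Python, the application below accepts any callable with the right shape as its lane-following task. The class, the function names, and the two steering rules are hypothetical and exist only to show the idea.

```python
from typing import Callable, Sequence, Tuple

# A lane-following task maps lane-centre offsets (pixels, one per image row,
# farthest row first) to a steering angle in radians.
LaneTask = Callable[[Sequence[float]], float]


def provided_lane_task(offsets_px: Sequence[float]) -> float:
    """Stand-in for a supplied task: steer on the mean offset."""
    return -0.005 * sum(offsets_px) / len(offsets_px)


def my_lane_task(offsets_px: Sequence[float]) -> float:
    """Custom replacement: weight the nearest rows more heavily."""
    weights = range(1, len(offsets_px) + 1)
    weighted = sum(w * o for w, o in zip(weights, offsets_px)) / sum(weights)
    return -0.005 * weighted


class LaneFollowingApp:
    """Application that depends only on the task's interface."""

    def __init__(self, lane_task: LaneTask, cruise_throttle: float = 0.3):
        self.lane_task = lane_task
        self.cruise_throttle = cruise_throttle

    def step(self, offsets_px: Sequence[float]) -> Tuple[float, float]:
        """Return (throttle, steering) for one control cycle."""
        return self.cruise_throttle, self.lane_task(offsets_px)


# Swapping the algorithm is a one-argument change:
app = LaneFollowingApp(lane_task=my_lane_task)
```

Nothing else in the application has to change when the task is replaced, as long as the new task accepts the same inputs and returns the same kind of output.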

This approach has an additional advantage: it facilitates mixing languages. Want your speed controller in Simulink while running a neural net in Python? Just replace the analytic image-processing task with our cross-language communications link, and that one task can run in Python. Want to do something with custom hardware? Go back to one of our basic interfacing examples as a starting point. Once you have the details worked out, you can integrate it into one of the more complex examples to give your research a more advanced starting point.
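To illustrate the general idea of handing data between languages, here is a small Python sketch of a fixed binary message that a Python task could send to a controller written in another language. This is not the Quanser communications link: the message layout and function names are assumptions, and plain `struct` packing stands in for whatever transport is actually used.

```python
import struct

# Hypothetical wire format (an assumption for this sketch):
# little-endian uint32 sequence number, then a float64 steering angle (rad)
# and a float64 confidence value between 0 and 1.
COMMAND_FORMAT = struct.Struct("<Idd")


def encode_command(seq: int, steering_rad: float, confidence: float) -> bytes:
    """Pack one steering command into a fixed-size byte string."""
    return COMMAND_FORMAT.pack(seq, steering_rad, confidence)


def decode_command(payload: bytes):
    """Unpack a command; reject payloads of the wrong size."""
    if len(payload) != COMMAND_FORMAT.size:
        raise ValueError(
            f"expected {COMMAND_FORMAT.size} bytes, got {len(payload)}"
        )
    return COMMAND_FORMAT.unpack(payload)
```

A fixed-size layout with an explicit byte order is easy to parse on the receiving side, whichever language that side is written in.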

At this point, we already have dozens of QCar-specific application examples spanning Python, ROS, C, and MATLAB/Simulink, and many more general examples using our APIs.

Over the coming months, we will continue building this library of reusable code and adding third-party support. We will regularly update the examples and even give customers access to our “experimental folder,” so you can use our latest developments to keep your work on the cutting edge.

Mixed Reality and the Unreal Engine

Something I’ve teased before is mixing the physical and virtual worlds. We are officially adding support for the Unreal Engine for simulations, visualizations, augmented reality, and mixed reality. Using the Unreal Engine has been a passion of mine for years now, and I am constantly amazed at the visual quality you can achieve with very little effort.

We are going to supply a fully rigged, animated, and textured model of the QCar, the same “floor panels” so you can recreate identical setups in both the virtual and physical worlds, as well as working examples to integrate with your hardware.

The best part is that you don’t need to write a single line of C++! The models and examples work with Epic’s “blueprint” system, a high-level graphical language. If you start with an example we provide, you might not even need to touch that. Of course, if you want to delve into the depths of the engine, Epic provides full source code for their engine. Need models of buildings? Or animals from the Middle East? Or a set of road signs for Japan? There are thousands of assets for sale on Unreal Engine’s Marketplace, as well as hundreds of free models and textures. You can also import models from Blender, 3ds Max, Maya, Solidworks, Sketchup Pro, and many other packages.

So what can you do? You can create virtual parking lots, or outdoor environments. One of the more exciting applications is localizing the car, streaming camera data to the Unreal Engine, then overlaying virtual elements on the video stream and returning it for processing to create virtual towns in an otherwise empty physical room.
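The overlay step itself can be pictured as per-pixel alpha blending of a rendered virtual layer onto the camera frame. The Python sketch below is a simplified illustration under that assumption. It uses plain lists of RGB tuples rather than a real image library, and it does not reflect how the Unreal Engine integration is actually implemented.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]


def blend_pixel(camera: Pixel, virtual: Pixel, alpha: float) -> Pixel:
    """Mix one virtual pixel over one camera pixel.

    alpha = 0.0 keeps the camera pixel; alpha = 1.0 shows only the
    virtual element.
    """
    return tuple(
        round(alpha * v + (1.0 - alpha) * c) for c, v in zip(camera, virtual)
    )


def overlay(
    frame: List[List[Pixel]],
    layer: List[List[Pixel]],
    mask: List[List[float]],
) -> List[List[Pixel]]:
    """Blend a rendered layer onto a camera frame using a per-pixel mask.

    All three inputs are assumed to have the same dimensions.
    """
    return [
        [blend_pixel(c, v, a) for c, v, a in zip(f_row, l_row, m_row)]
        for f_row, l_row, m_row in zip(frame, layer, mask)
    ]
```

Because only the masked pixels change, the rest of the image keeps the noise and lighting of the real camera.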

We can also simulate the depth cameras and LIDAR so these too can be merged with your real-world sensors. You can add small details like working traffic lights, or more advanced situations like pedestrians crossing the road. By adding small virtual elements to your otherwise physical camera images, you can validate your algorithms with the real-world issues of sensor noise and lighting variations. This also lets you test the muddy ethical waters of self-driving dilemmas without risking the safety of actual people, as well as testing and consistently reproducing the one-in-a-million scenarios that would be difficult or impossible to devise with purely physical hardware.
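For range sensors, one simple way to think about merging is to keep the nearer return on each beam, so a virtual pedestrian standing in front of a real wall hides the wall. The Python sketch below shows that idea. It assumes both scans use the same beam angles and that a missing return is reported as infinity; the function name is hypothetical.

```python
import math
from typing import List, Sequence


def merge_scans(real: Sequence[float], virtual: Sequence[float]) -> List[float]:
    """Combine a real and a simulated LIDAR scan beam by beam.

    Each list holds one range (metres) per beam, in the same angular order.
    math.inf means "no return" on that beam.
    """
    if len(real) != len(virtual):
        raise ValueError("scans must have the same number of beams")
    # The closer obstacle on each beam blocks whatever lies behind it.
    return [min(r, v) for r, v in zip(real, virtual)]


real_scan = [2.0, math.inf, 1.5]
virtual_scan = [math.inf, 3.0, 0.8]
merged = merge_scans(real_scan, virtual_scan)
```

The same nearest-wins rule could be applied per pixel to a depth image.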

Out of the Box

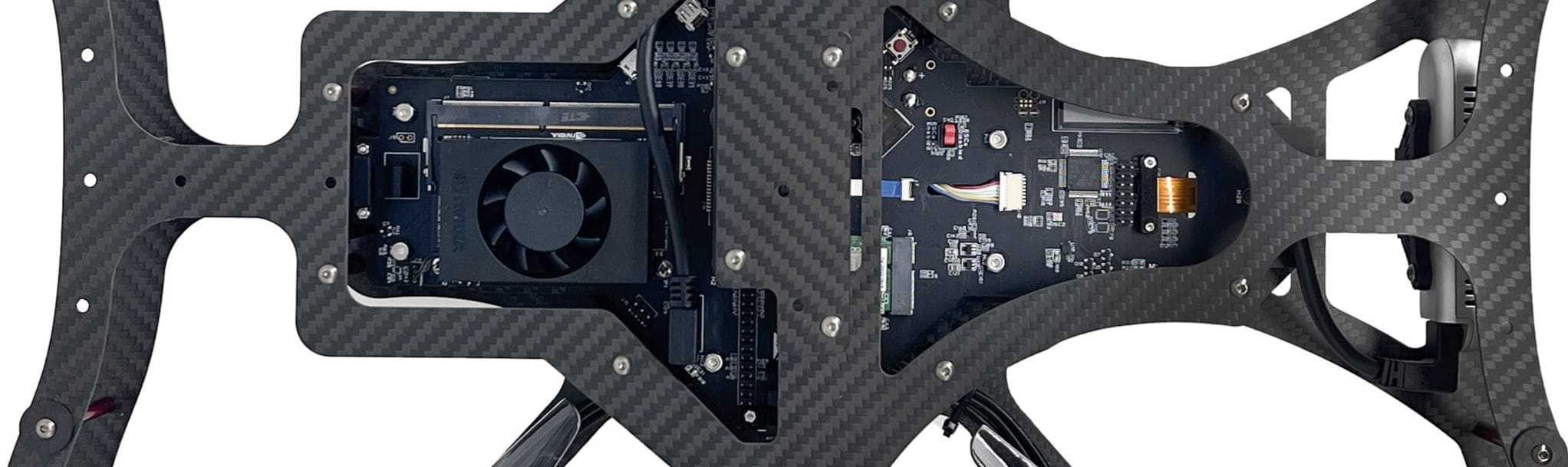

At the heart of every studio is the PC. And not just any PC, but a PC to make AI researchers and graphics developers alike jealous of your hardware. We have spent years making QUARC as installation-friendly as possible… as scientific computing software goes… but installation help is still our number one tech support request. Part of the difficulty here is that QUARC installation depends on other supporting software. The Self-Driving Car Research Studio depends on dozens of software packages to support ROS, Python, MATLAB/Simulink, the Unreal Engine, and the many other AI tools. We’ve spent months sorting out all the intricacies between the software components, so you don’t need to. And with all the simulations and mixed reality examples, we also know exactly what the computer is capable of, so we can continue to deliver top-quality examples that fully leverage your research investment.

Driving Forward

As the project manager on the QCar and the Self-Driving Car Research Studio, I spend most of my time making sure everyone is on the same page, and we’re meeting our deadlines. Outside of my contribution to the augmented reality prototype example in the video above, I haven’t had much time to develop content (aka play) with the QCar myself, but now I get a couple of months to do just that, and I’m very excited! A lot of us at Quanser love to geek out with our own products, and the QCar is no exception. At our internal release, after showing the QCar and the studio to the rest of Quanser, and while everyone went to eat the QCar cake, a few of the engineers from the audience started playing with the QCar. In just 30 minutes, they whipped up an adaptive cruise controller. Sure, they were all late for the afternoon meeting, but we let them have some fun!
