Context

It’s a pleasure to work for a company where we spend time discussing why we do what we do. We believe the world needs more multidisciplinary engineers, especially the authentic, hands-on and fearless kind. How are we going to help make that happen? By working closely with academics around the world to understand their education and research needs, and by solving the problems that come with them. This culminates in what we do: creating engineering technology that helps academics meet the changing requirements at the frontiers of engineering innovation.

One such “what” involves solutions for undergraduate and graduate mobile robotics. On that frontier, Quanser has offered a range of solutions to customers around the world for over 20 years. The original QBot was built on the iRobot Create, with a custom embedded target and an expanded sensing suite. We experimented with other versions before ultimately collaborating with an academic at the University of Toronto to design our next offering, QBot 2. The Kobuki mobile base, combined with a Kinect depth camera and a Gumstix DuoVero target, carried us forward for quite a few years. As the sensor suite and computing targets evolved from the Gumstix to the Raspberry Pi 3 and then the Raspberry Pi 4, we watched with curiosity and pride as our savvy friends, educators and researchers embraced these robots in their courses, used them to validate research algorithms and took them even further. They added manipulators, lidars, storage bins, IR sensors and more, and used them in various multiagent applications.

As the world around us faced supply chain issues, we took the opportunity to pause, rethink and redesign from the ground up, drawing on our close academic experience and our knowledge of how these systems improve the lives of our partners, students, educators and researchers. This coincided with a growing need for Autonomous Mobile Robots (AMRs), driven by trends in industrial automation, warehousing and autonomous delivery. We were now in a position to bring together the key design features our friends were interested in, such as landing an aerial vehicle on a moving ground robot, manipulator add-ons and higher payloads, while also gaining full control over our own mobile robot base. We have recently launched our answer to this problem.

Introducing QBot Platform

The QBot Platform is designed for educators and researchers, who make difficult decisions all the time. Alongside navigating institutional politics, supporting research initiatives, worrying about student retention and defining learning outcomes, they must also make decisions about hardware acquisition. Numerous factors, such as funding, technical specifications, research applicability and the diversity of learning styles, must be weighed across a range of hardware solutions that fall on the DIY/academic/industrial spectrum. The tradeoffs are rarely linear. With the QBot Platform, you get industrial-grade performance without giving up DIY flexibility.

Education & Projects

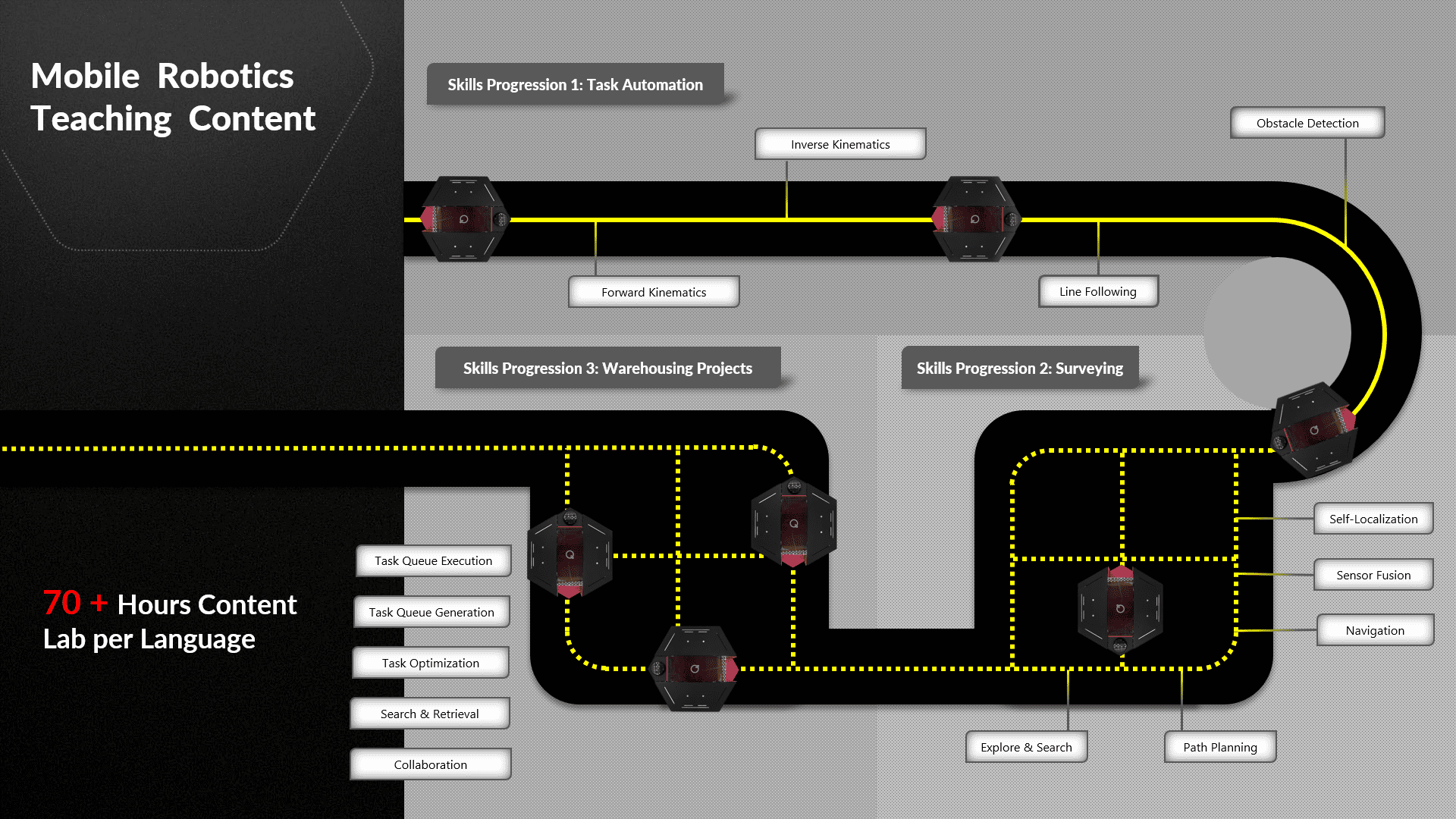

The teaching content for the QBot Platform, part of the Mobile Robotics Lab, is designed to cater to all years of undergraduate and graduate education. In designing it, we considered the life cycle of motivation, foundation, expansion and exploration across a four-year robotics or mechatronics program, along with the innovation expected in graduate courses. The content covers learning outcomes related to sensor and actuator interfacing, image and lidar processing, visual servoing, obstacle detection, localization, navigation and path planning, all the way up to multiagent collaboration.

Additionally, all of this lab content is available in both Python and MATLAB/Simulink, so you can focus on learning outcomes regardless of your students’ programming background and preferences. Most labs can be run on the physical hardware, on the digital twin, or on both. Lastly, the QBot Platform’s peripheral interfaces, including USB 3.0, a 40-pin GPIO header, and Wi-Fi and Ethernet connectivity, make it ideal for project-based learning (PBL), whether in second-year integrated projects or fourth-year capstone projects.

Research & Innovation

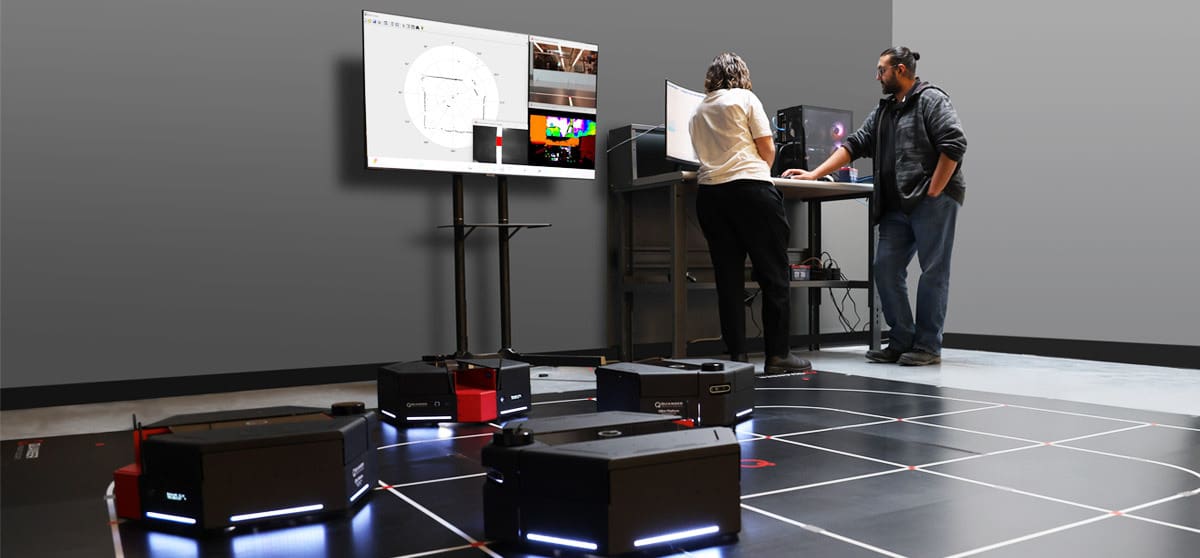

As before, the QBot Platform is built around a differential-drive configuration, but it now features an upgraded 70 W drivetrain. Together with four support castors, this allows it to carry uniformly distributed payloads of up to 20 kg in most indoor environments. The expanded sensor suite packs inertial, odometry, visual and ranging sensors into one package. Behind all this sensing and actuation is a computing node powered by the Jetson Orin Nano, one of the latest embedded targets from NVIDIA. With onboard Wi-Fi, you can remotely control a swarm of these robots simultaneously for all sorts of research applications, such as multiagent collision avoidance. We developed this demo in under an hour; imagine the possibilities in your innovation center!

Next steps

The QBot Platform is useful both for traditional educational outcomes and for mobile robotics projects across all undergraduate years, without sacrificing the robustness or performance needed for graduate-level research. The Mobile Robotics Lab makes it even easier to integrate multiple QBot Platforms into a new setting. With several units, a preconfigured network infrastructure, virtualized digital twins and a reconfigurable environment, it transforms any empty room into an innovation hub for students and academics alike. If you are interested in these solutions or have any questions about them, reach out to us, or watch the Mobile Robotics Lab webinar here!

Talk soon.