In the previous QCar blog post, we discussed LiDAR-based SLAM techniques as one way of localizing a vehicle. To localize a vehicle in a room, you could also use optical localization hardware and software solutions such as OptiTrack or Vicon. Another option is an image-based localization technique using April Tags.

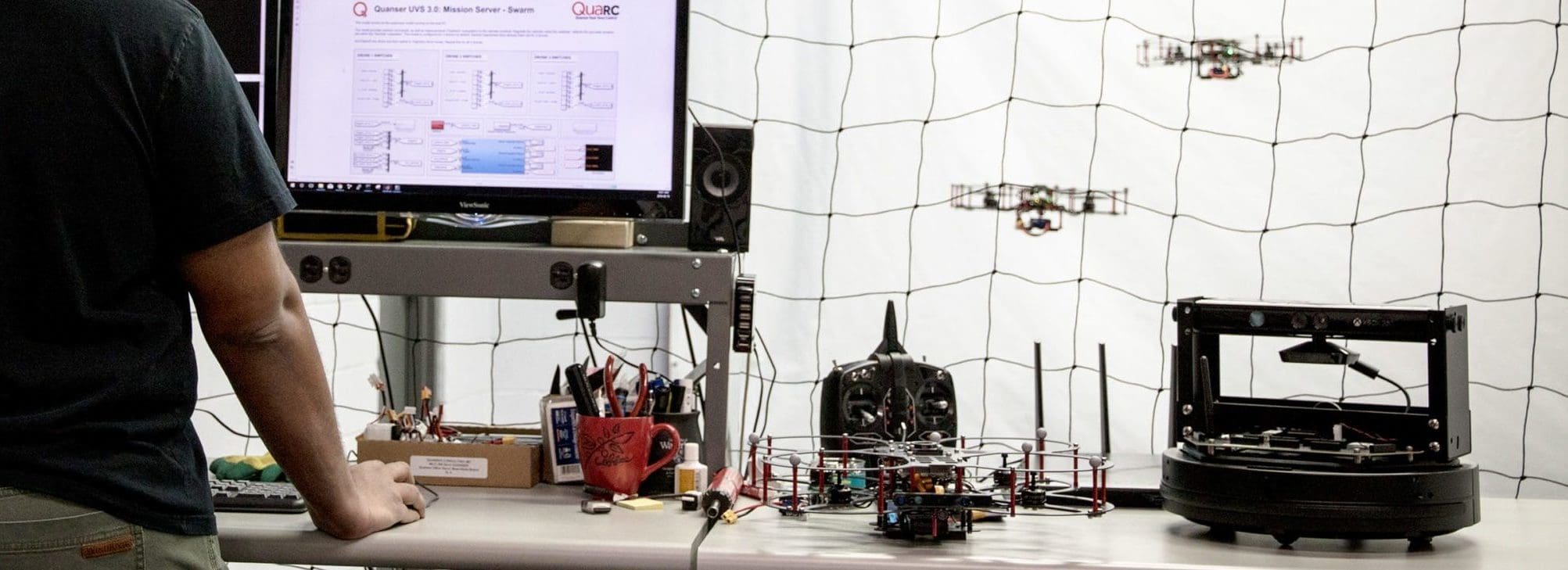

Test Configuration

To localize the QCar in my roughly 4×4 m office space, I set up ten sets of 36h11 April Tags, each set consisting of two tags. I used the QCar’s Intel RealSense D435 camera as my video capture device and MATLAB/Simulink with Quanser’s real-time software QUARC for code generation and deployment.

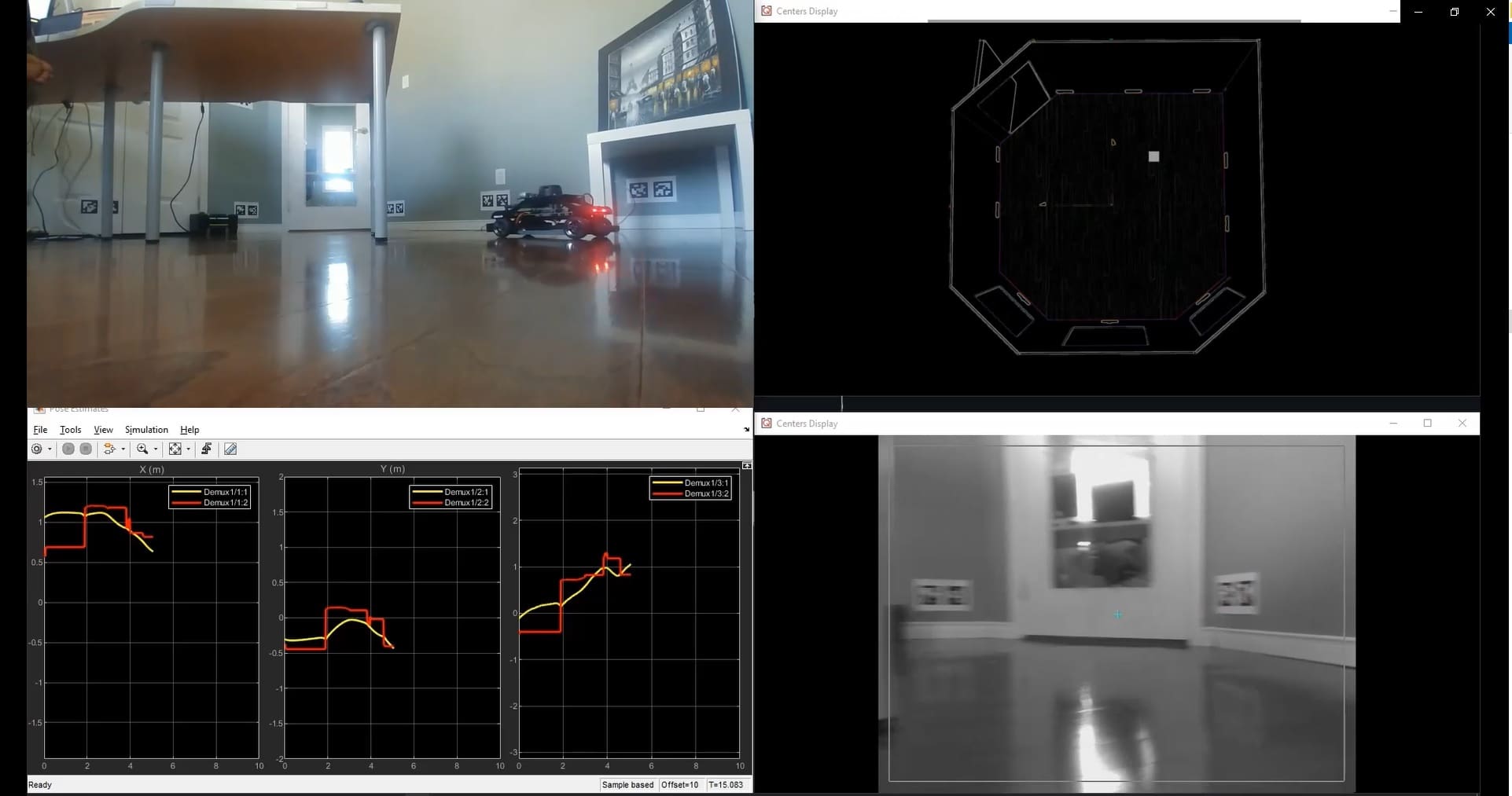

I’m trying to estimate the pose of the vehicle, that is, its XY planar position and its heading, or yaw angle. I also fed the steering, gyroscope, and encoder-based car velocity data into a 3-DOF bicycle model to estimate the pose rate of the vehicle.
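As a rough illustration of what a pose rate means here, the following is a minimal Python sketch of a kinematic bicycle model. It is not the Simulink model used in this example: the function name, the wheelbase value, and the option to substitute a measured gyro yaw rate are all assumptions made for illustration.

```python
import math

# Assumed placeholder wheelbase in meters; not a value taken from this post.
WHEELBASE_M = 0.256


def pose_rate(speed, heading, steering, wheelbase=WHEELBASE_M, gyro_yaw_rate=None):
    """Return (x_dot, y_dot, yaw_dot) in the world frame.

    speed         -- forward speed in m/s (e.g. from the encoder)
    heading       -- current yaw angle in rad
    steering      -- front-wheel steering angle in rad
    gyro_yaw_rate -- optional measured yaw rate in rad/s; when given,
                     it replaces the steering-based estimate
    """
    x_dot = speed * math.cos(heading)
    y_dot = speed * math.sin(heading)
    if gyro_yaw_rate is not None:
        yaw_dot = gyro_yaw_rate
    else:
        yaw_dot = speed * math.tan(steering) / wheelbase
    return x_dot, y_dot, yaw_dot
```

Driving straight along the world X axis, for example, `pose_rate(1.0, 0.0, 0.0)` gives a rate of 1 m/s in X and zero in Y and yaw.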

How It Works

To initialize the code, I set up two MATLAB scripts. The first one uses a series of calibration images taken by the camera to generate the camera intrinsic matrix. The second script allows me to specify the position and orientation of each of the April Tags in world coordinates. This is important because the April Tag code provides the vehicle pose with respect to the April Tag set, so the conversion to the world coordinate frame must be handled separately.
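To show what the intrinsic matrix is used for, here is a minimal Python sketch of the standard pinhole projection, which maps a 3D point in the camera frame to pixel coordinates. The function name and the focal length and principal point values in the usage note are hypothetical; they are not the calibration results from my camera.

```python
def project_point(point_cam, fx, fy, cx, cy):
    """Project a 3D point in the camera frame to pixel coordinates.

    point_cam -- (x, y, z) in meters, with z pointing out of the camera
    fx, fy    -- focal lengths in pixels (from the intrinsic matrix)
    cx, cy    -- principal point in pixels (from the intrinsic matrix)
    """
    x, y, z = point_cam
    if z <= 0:
        raise ValueError("point must be in front of the camera")
    u = fx * x / z + cx
    v = fy * y / z + cy
    return u, v
```

With assumed values `fx = fy = 600` and `(cx, cy) = (320, 240)`, a point on the optical axis projects to the principal point. Pose estimation from tags runs this relationship in reverse: known tag corner positions and their detected pixel locations constrain where the camera must be.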

The April Tag code is built around two blocks. The Image Find Tags block reports the IDs of the tags found, along with the locations of their corners and centers. The Image Get Camera Extrinsics block gives me the pose of the camera in the frame of reference of the April Tag set. Using one of my MATLAB scripts, I can convert this data to the world coordinate frame.
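The conversion from a tag-set frame to the world frame can be sketched as a rigid-body transform. The Python below is a planar simplification that assumes the pose has already been reduced to (x, y, yaw); the tag map entries, IDs, and function names are hypothetical and do not reproduce my MATLAB script.

```python
import math

# Hypothetical tag map: tag-set ID -> (x, y, yaw) of that set in the world frame.
TAG_MAP = {
    0: (0.0, 2.0, 0.0),
    1: (4.0, 2.0, math.pi),
}


def wrap_angle(angle):
    """Wrap an angle in rad to the interval [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


def tag_to_world(tag_id, pose_in_tag):
    """Convert a planar pose expressed in a tag-set frame to the world frame."""
    xt, yt, yaw_t = TAG_MAP[tag_id]
    xc, yc, yaw_c = pose_in_tag
    # Rotate the tag-frame position by the tag's world yaw, then translate.
    x = xt + math.cos(yaw_t) * xc - math.sin(yaw_t) * yc
    y = yt + math.sin(yaw_t) * xc + math.cos(yaw_t) * yc
    return x, y, wrap_angle(yaw_t + yaw_c)
```

Because each tag set carries its own world pose, covering a larger space in this sketch only means adding entries to the map.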

My Simulink model allows me to drive the vehicle using motor commands from my keyboard. The hardware IO loop gives me the gyroscopic yaw rate, encoder-based vehicle speed, and steering commands, and passes them to my bicycle model to estimate the pose rate of the vehicle. The images captured by the Intel RealSense camera are passed to the April Tag code, which then estimates the pose of the vehicle. When no tags are found, I fill in the vehicle pose by integrating the pose rate from the fused sensor data, and I correct the estimate when tags are found again. The code also scales up smoothly if you want to use more tag sets to cover a much larger room.
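The update logic described above can be sketched in a few lines of Python. This is a simplified illustration, not the Simulink implementation: the function name is hypothetical, the integration is plain forward Euler, and the correction is assumed to be a direct reset to the tag-based pose, whereas a real system might blend the two estimates with a filter.

```python
import math


def update_pose(pose, rate, dt, tag_pose=None):
    """Advance the (x, y, yaw) pose estimate by one time step.

    pose     -- current estimate (x, y, yaw)
    rate     -- pose rate (x_dot, y_dot, yaw_dot) from the motion model
    dt       -- time step in seconds
    tag_pose -- world-frame pose from the tags, or None if no tag is visible
    """
    if tag_pose is not None:
        # Assumed correction strategy: trust the tag measurement outright.
        return tag_pose
    x, y, yaw = pose
    x_dot, y_dot, yaw_dot = rate
    yaw_new = yaw + yaw_dot * dt
    # Keep the heading wrapped to [-pi, pi] as it accumulates.
    yaw_new = math.atan2(math.sin(yaw_new), math.cos(yaw_new))
    return (x + x_dot * dt, y + y_dot * dt, yaw_new)
```

Integrated pose drifts over time, so the longer the camera goes without seeing a tag, the larger the jump when the next tag-based pose arrives.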

I hope you found this example helpful. It is one of many examples provided with the Self-Driving Car Research Studio to help you do your research faster and more easily.