QCar

Sensor-rich autonomous vehicle for self-driving applications

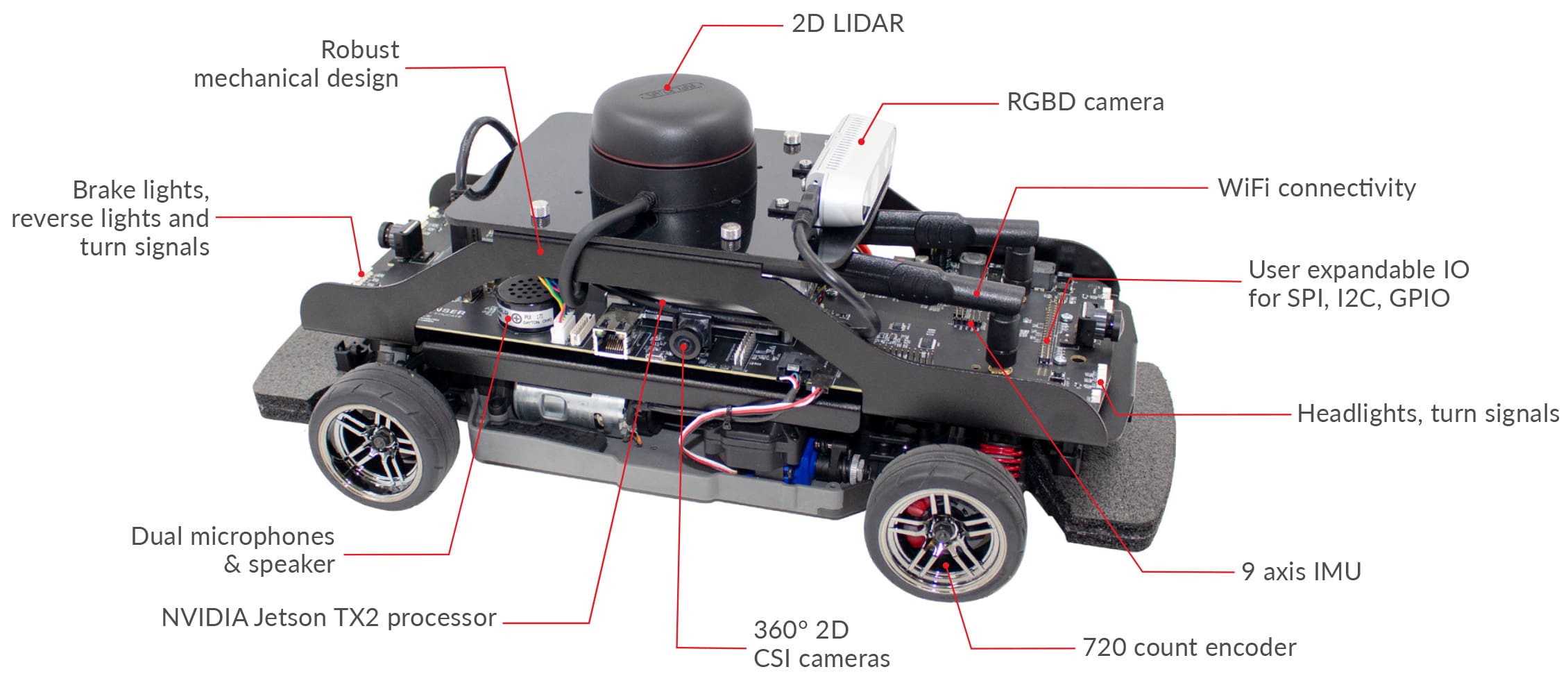

QCar, the feature vehicle of the Self-Driving Car Studio, is an open-architecture, scaled model vehicle designed for academic teaching and research. It is equipped with a wide range of sensors, including LIDAR, 360-degree vision, a depth sensor, an IMU, and encoders, as well as user-expandable IO. The vehicle is powered by an NVIDIA® Jetson™ TX2 supercomputer that gives you exceptional speed and power efficiency.

Product Details

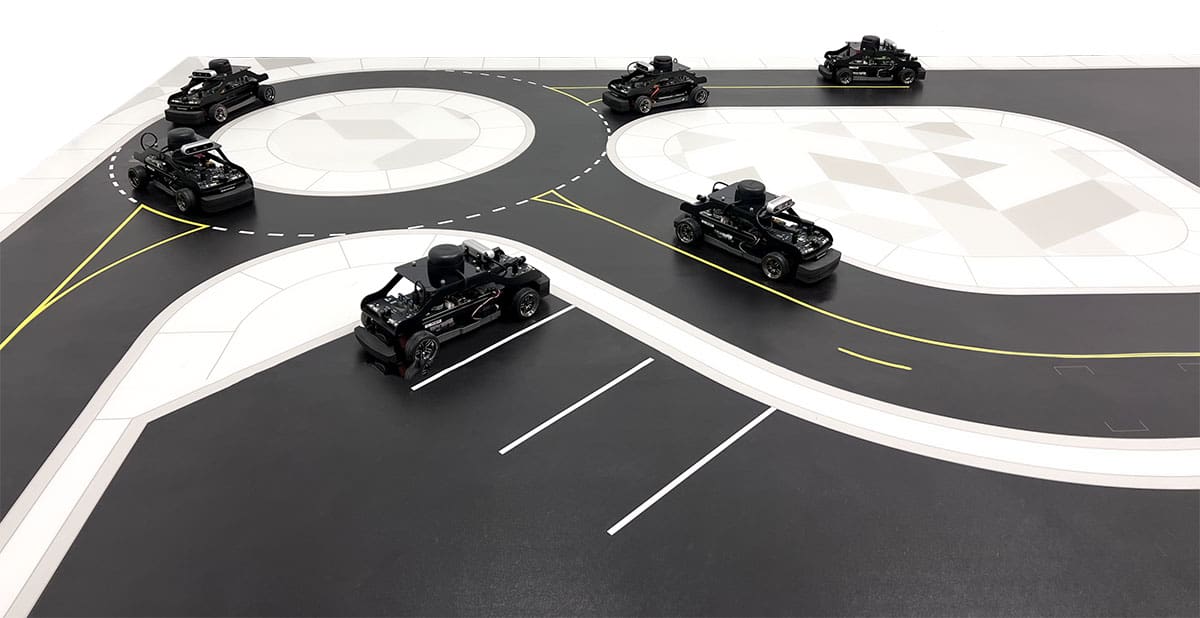

Working individually or in a fleet, QCar is the ideal vehicle for highlighting self-driving concepts in skills activities, project-based learning experiences, research concept validation, and outreach showcases. Common topics include dataset generation, mapping, navigation, machine learning, artificial intelligence, and more.

Supported Software and APIs

| | |
|---|---|
| TensorFlow | TensorRT |
| Python™ | ROS 1 & 2 |
| MATLAB Simulink | C++ |
| CUDA® | cuDNN |
| OpenCV | DeepStream SDK |
| VPI™ | VisionWorks® |
| GStreamer | Jetson Multimedia APIs |
| Docker containers with GPU support | Simulink® with Simulink Coder |
| Simulation and virtual training environments (Gazebo, Quanser Interactive Labs) | |

| Specification | Details |
|---|---|
| Dimensions | 39 x 21 x 21 cm |
| Weight (with batteries) | 2.7 kg |
| Power | 3S 11.1 V LiPo (3300 mAh) with XT60 connector |
| Operation time (approximate) | ~2 h 11 min (stationary, with sensor feedback) |
| | 30 min (driving, with sensor feedback) |
| Onboard computer | NVIDIA® Jetson™ TX2 |
| | CPU: 2 GHz quad-core ARM Cortex-A57 64-bit + 2 GHz dual-core NVIDIA Denver2 64-bit |
| | GPU: 256 CUDA core NVIDIA Pascal™ GPU architecture, 1.3 TFLOPS (FP16) |
| | Memory: 8 GB 128-bit LPDDR4 @ 1866 MHz, 59.7 GB/s |
| LIDAR | LIDAR with 2k-8k resolution, 10-15 Hz scan rate, 12 m range |
| Cameras | Intel D435 RGBD camera |
| | 360° 2D CSI cameras using 4x 160° FOV wide-angle lenses, 21 fps to 120 fps |
| Encoders | 720-count motor encoder pre-gearing with hardware digital tachometer |
| IMU | 9-axis IMU sensor (gyro, accelerometer, magnetometer) |
| Safety features | Hardware “safe” shutdown button |
| | Auto-power off to protect batteries |
| Expandable IO | 2x SPI |
| | 4x I2C |
| | 40x GPIO (digital) |
| | 4x USB 3.0 ports |
| | 1x USB 2.0 OTG port |
| | 3x Serial |
| | 4x Additional encoders with hardware digital tachometer |
| | 4x Unipolar analog input, 12 bit, 3.3 V |
| | 2x CAN Bus |
| | 8x PWM (shared with GPIO) |
| Connectivity | WiFi 802.11a/b/g/n/ac 867 Mbps with dual antennas |
| | 1x Micro HDMI port for external monitor support |
| Additional QCar features | Headlights, brake lights, turn signals, and reverse lights (with intensity control) |
| | Dual microphones |
| | Speaker |
| | LCD diagnostic monitoring, battery voltage, and custom text support |
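As a rough sanity check on the specifications above, the following Python sketch derives two quantities from the listed figures: the theoretical peak memory bandwidth from the bus width and clock, and the average battery current and power implied by the capacity and operation times. It reads the operation times as 2 h 11 min and 30 min, assumes the LPDDR4 bus transfers data on both clock edges, and assumes the full rated capacity is consumed over each run time, so the current and power values are upper bounds rather than measured figures. The function names are illustrative and not part of any Quanser or NVIDIA API.

```python
"""Back-of-envelope checks on the QCar datasheet figures (illustrative only)."""


def peak_memory_bandwidth_gbs(bus_width_bits: int, clock_mhz: float,
                              transfers_per_clock: int = 2) -> float:
    """Theoretical peak bandwidth in GB/s (1 GB = 1e9 bytes).

    Assumes a double-data-rate bus (two transfers per clock), as in LPDDR4.
    """
    bytes_per_transfer = bus_width_bits / 8
    transfers_per_second = clock_mhz * 1e6 * transfers_per_clock
    return bytes_per_transfer * transfers_per_second / 1e9


def average_current_a(capacity_mah: float, runtime_hours: float) -> float:
    """Average current in amperes if the full rated capacity is used.

    Assumption: the pack is fully discharged over the run time, so this
    is an upper bound on the real average draw.
    """
    return capacity_mah / 1000.0 / runtime_hours


def average_power_w(nominal_voltage_v: float, current_a: float) -> float:
    """Average electrical power in watts at the nominal pack voltage."""
    return nominal_voltage_v * current_a


if __name__ == "__main__":
    # 128-bit LPDDR4 @ 1866 MHz, as listed in the table.
    bandwidth = peak_memory_bandwidth_gbs(128, 1866)
    print(f"Peak memory bandwidth: {bandwidth:.1f} GB/s")  # prints 59.7

    # 3S 11.1 V, 3300 mAh pack with the two listed operation times.
    for label, hours in [("stationary", 2 + 11 / 60), ("driving", 0.5)]:
        current = average_current_a(3300, hours)
        power = average_power_w(11.1, current)
        print(f"{label}: about {current:.2f} A, {power:.1f} W average")
```

The bandwidth result agrees with the 59.7 GB/s listed in the table. The current and power estimates are only a planning aid, for example when budgeting power for devices attached to the expandable IO.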

Self-Driving Car Studio

The Self-Driving Car Studio is the most academically extensible solution that Quanser has created. As a turnkey research studio, the Self-Driving Car Studio equips researchers with the vehicles, accessories, infrastructure, devices, software, and resources needed to get from a concept to a published paper faster and more consistently across generations of projects and students. This capacity is enabled first by the powerful and versatile 1/10-scale QCars that have an unprecedented level of instrumentation, reconfigurability, and interoperability. To complement the cars, the studio also comes with a rich infrastructure component that includes:

- Programmable traffic lights

- Durable and multipurpose flooring

- A collection of scaled accessories such as signs and pylons

- A preconfigured PC with monitors and network infrastructure

Bridging from research to teaching applications, the studio comes with a one-year subscription to the QLabs Virtual QCar that mirrors and enriches the studio experience with a digitally twinned cityscape and virtual QCar fleet. This environment allows researchers to expand the potential scenarios that can be built to validate algorithms and behaviours. By bridging and blending code between the physical and virtual, researchers can explore new avenues for complex intelligence and behavioural analysis. For teaching, the virtual environment offers each student the opportunity to explore and develop when and where they want before testing and validating on the physical cars. Finally, across both teaching and research, our rich collection of research examples and recommended curriculum for MATLAB Simulink, Python, and ROS enables adoption without the need for extensive development of the fundamentals. Overall, the Self-Driving Car Studio is a multi-disciplinary and multipurpose turnkey laboratory that can accelerate research, diversify teaching, and engage students from recruitment to graduation.
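One way to picture sharing code between the physical and virtual cars is to write the control logic against a small interface that either backend can satisfy. The Python sketch below is a minimal illustration under that assumption: `VehicleBackend`, `SimulatedVehicle`, and `speed_control_step` are hypothetical names invented here, not part of the Quanser or QLabs APIs, and the simulated car is a toy first-order model with made-up parameters.

```python
from dataclasses import dataclass
from typing import Protocol


class VehicleBackend(Protocol):
    """Hypothetical interface; a physical or virtual car wrapper would implement it."""

    def read_speed(self) -> float: ...

    def write_command(self, throttle: float, steering: float) -> None: ...


@dataclass
class SimulatedVehicle:
    """Toy stand-in for a virtual car (illustrative only, parameters assumed)."""

    speed: float = 0.0  # current speed in m/s
    gain: float = 2.0   # assumed steady-state m/s per unit throttle
    tau: float = 0.5    # assumed time constant in seconds
    dt: float = 0.05    # assumed control period in seconds

    def read_speed(self) -> float:
        return self.speed

    def write_command(self, throttle: float, steering: float) -> None:
        # First-order lag toward gain * throttle; steering is ignored in this toy model.
        self.speed += self.dt / self.tau * (self.gain * throttle - self.speed)


def speed_control_step(vehicle: VehicleBackend, target_speed: float,
                       kp: float = 0.8) -> float:
    """Run one proportional-control step against any matching backend."""
    error = target_speed - vehicle.read_speed()
    throttle = max(-1.0, min(1.0, kp * error))  # clamp to [-1, 1]
    vehicle.write_command(throttle, steering=0.0)
    return throttle


if __name__ == "__main__":
    car = SimulatedVehicle()
    for _ in range(200):
        speed_control_step(car, target_speed=1.0)
    # A pure proportional controller settles below the target (steady-state error).
    print(f"speed after 200 steps: {car.speed:.3f} m/s")
```

Because `speed_control_step` depends only on the two interface methods, the same function could in principle be pointed at a wrapper around real hardware once it has been validated against the simulated backend.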