Self-Driving Car Studio

A multi-disciplinary turnkey laboratory that can accelerate research, diversify teaching, and engage students from recruitment to graduation.

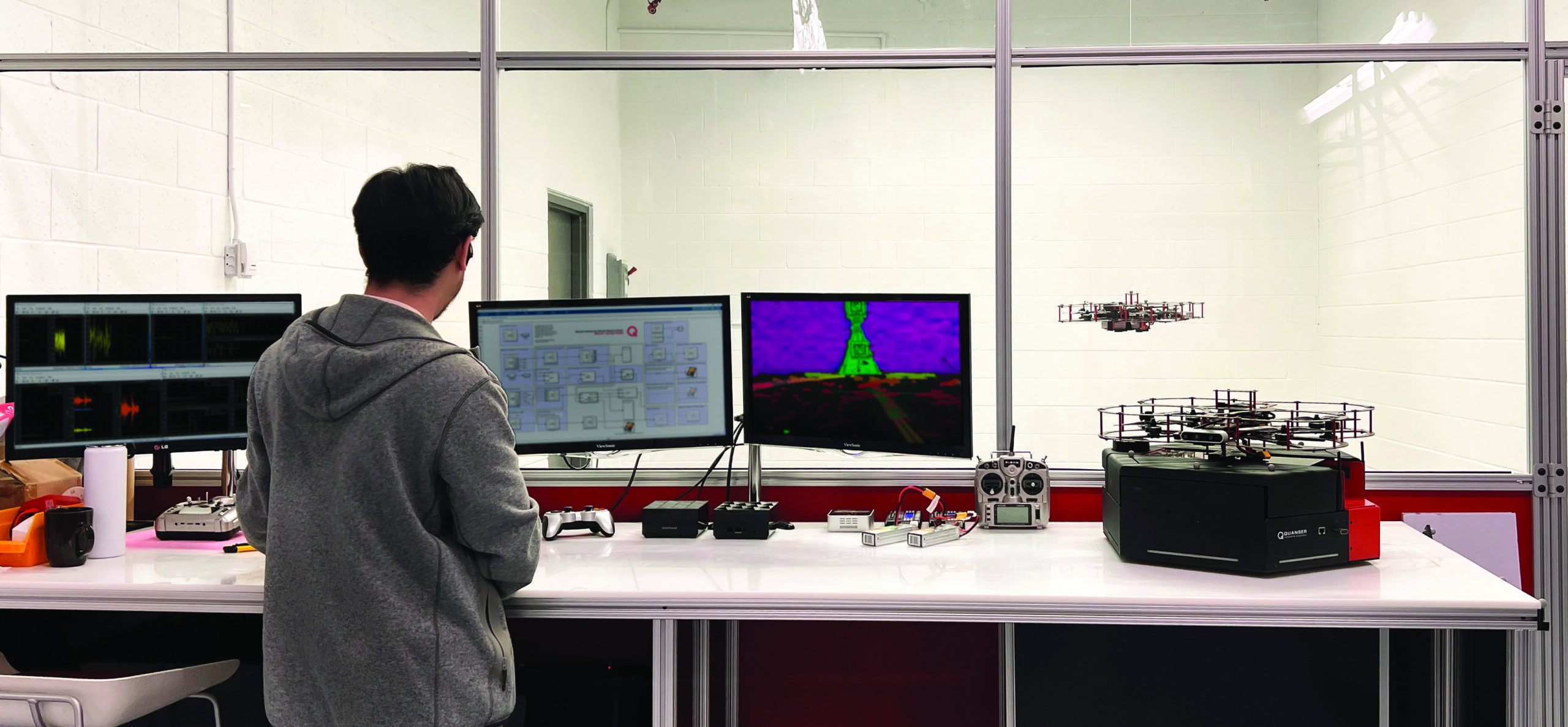

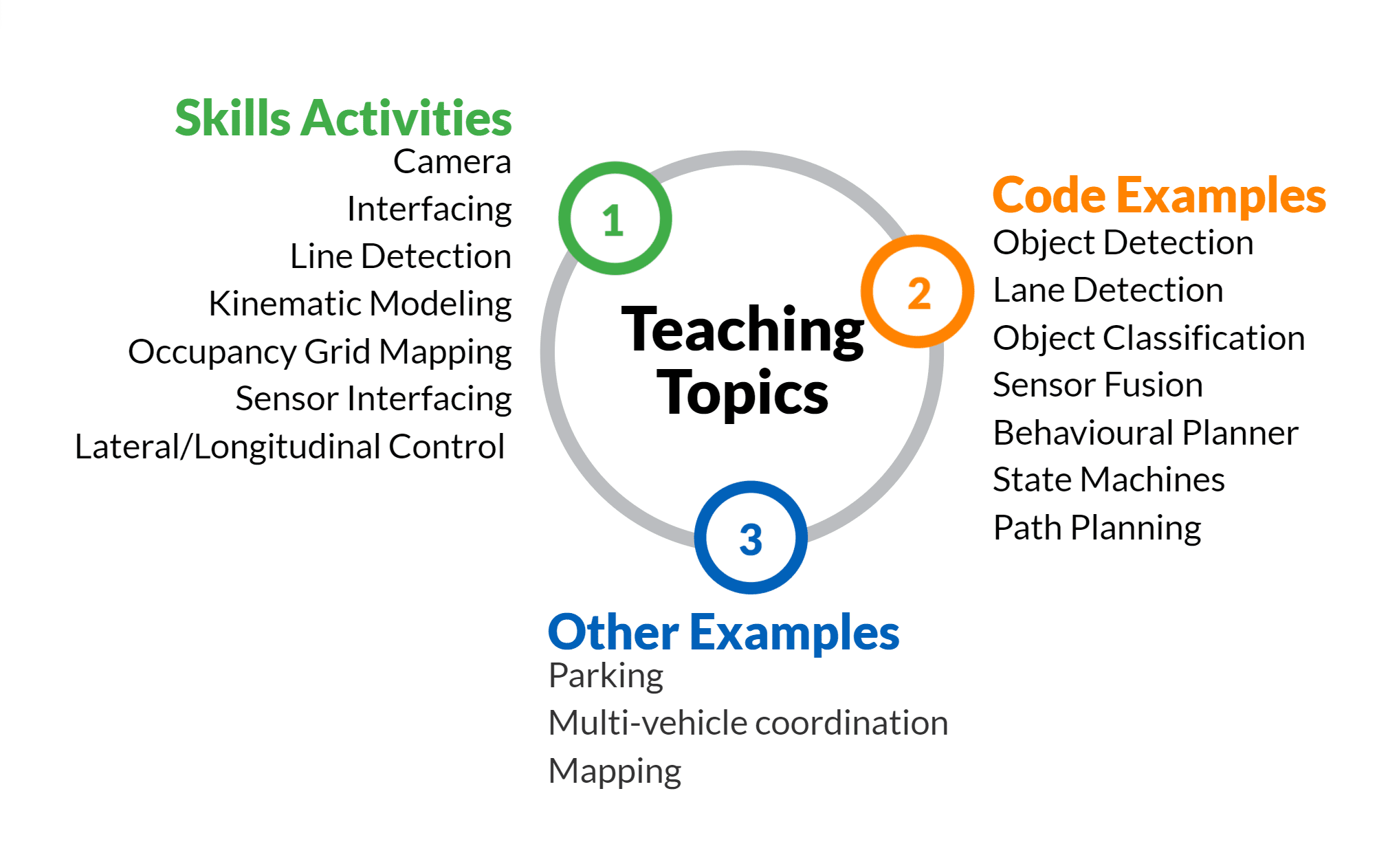

The Quanser Self-Driving Car Studio is the ideal platform for exploring a wide variety of self-driving topics in teaching and academic research, in an accessible and relevant way. Use it to jump-start your research or to give students authentic, hands-on experience with the essentials of self-driving. The studio provides the tools and components you need to generate datasets and to test and validate mapping, navigation, machine learning, and other advanced self-driving concepts, at home or on campus.

Check out the ACC Self-Driving Car Student Competition for more information on how Quanser and the QCar are empowering the next generation of engineers.

Product Details


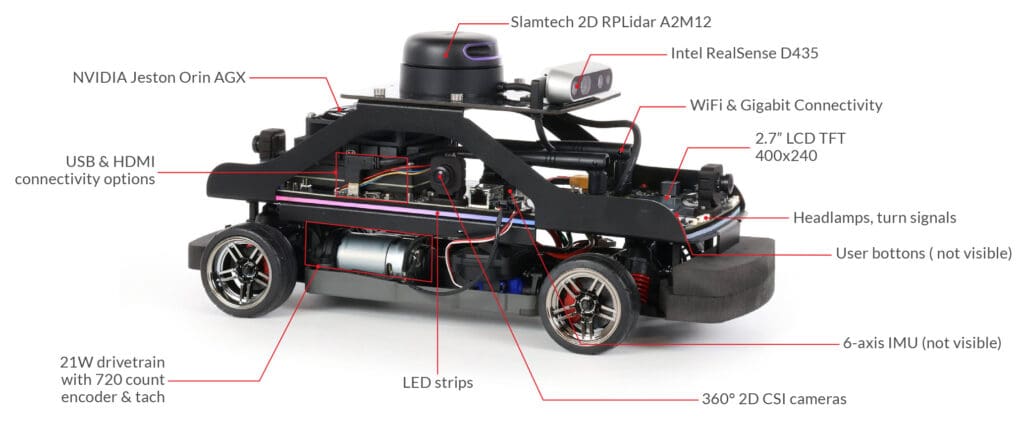

At the center of the Self-Driving Car Studio is the QCar 2, an open-architecture scaled model vehicle powered by an NVIDIA® Jetson™ AGX Orin supercomputer and equipped with a wide range of sensors, cameras, encoders, and user-expandable I/O.

Built on software tools including Simulink®, Python™, TensorFlow, and ROS, and supported by pre-built modules and libraries, the studio lets researchers build high-level applications and reconfigure low-level processes. Using these building blocks, you can explore topics such as machine learning and artificial intelligence training, augmented/mixed reality, smart transportation, multi-vehicle scenarios and traffic management, cooperative autonomy, navigation, mapping, control, and more.

Specifications

| Specification | Value |
|---|---|
| Dimensions | 39 × 21 × 21 cm |
| Weight (with batteries) | 2.7 kg |
| Power | 3S 11.1 V LiPo (3300 mAh) with XT60 connector |
| Operation time (approximate) | ~2 h 11 min (stationary, with sensor feedback) |
| | ~30 min (driving, with sensor feedback) |
| Onboard computer | NVIDIA® Jetson™ AGX Orin |
| | CPU: 2.2 GHz 8-core ARM Cortex-A78 64-bit |
| | GPU: 930 MHz, 1792 CUDA cores / 56 Tensor cores |
| | NVIDIA Ampere GPU architecture, 200 TOPS |
| | Memory: 32 GB 256-bit LPDDR5 @ 204.8 GB/s |
| LIDAR | LIDAR with 16k points, 5-15 Hz scan rate, 0.2-12 m range |
| Cameras | Intel RealSense D435 RGB-D camera |
| | 360° 2D CSI cameras using 4× 160° FOV wide-angle lenses, 21 to 120 fps |
| Encoders | 720-count motor encoder (pre-gearing) with hardware digital tachometer |
| IMU | 6-axis IMU (gyroscope and accelerometer) |
| Safety features | Hardware “safe” shutdown button |
| | Auto power-off to protect batteries |
| Expandable I/O (items marked * are subject to change) | 2 user PWM output channels |
| | Motor throttle control |
| | Steering control |
| | 2 unipolar user analog input channels, 12-bit, +3.3 V |
| | Motor current analog inputs |
| | 3 encoder channels (motor position plus up to two additional encoders) |
| | 11 reconfigurable digital I/O |
| | 3 user buttons |
| | 2 general-purpose 3.3 V high-speed serial ports* |
| | 1 high-speed 3.3 V SPI port (up to 25 MHz)* |
| | 1 1.8 V I2C port (up to 1 MHz)* |
| | 1 3.3 V I2C port (up to 1 MHz)* |
| | 2 CAN bus interfaces (supporting CAN FD) |
| | 1 USB port |
| | 1 USB-C host port |
| | 1 USB-C DRP (dual-role port) |
| Connectivity | Wi-Fi 802.11a/b/g/n/ac, 867 Mbps, with dual antennas |
| | 1× HDMI |
| | 1× 10/100/1000BASE-T Ethernet |
| Additional QCar features | Headlamps, brake lights, turn signals, and reverse lights |
| | Individually programmable RGB LED strip (33 LEDs) |
| | Dual microphones |
| | Speaker |
| | 2.7″ 400×240 TFT LCD for diagnostic monitoring |
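The power and encoder figures in the specifications lend themselves to some quick back-of-the-envelope arithmetic. The Python sketch that follows is an illustration, not Quanser code, and its function names are hypothetical: it computes the battery's nominal energy from the 11.1 V / 3300 mAh rating, the average power draw implied by the approximate operation times, and motor shaft speed from an encoder count difference. It assumes the full nominal capacity is usable and that "720-count" means 720 decoded counts per motor revolution; the table does not state the quadrature multiplier, gear ratio, or wheel radius, so wheel speed is deliberately not computed.

```python
import math

# Values taken from the specification table.
BATTERY_VOLTAGE_V = 11.1      # 3S LiPo nominal voltage
BATTERY_CAPACITY_AH = 3.3     # 3300 mAh
# Assumption: 720 decoded counts per motor revolution, measured before the gearbox.
ENCODER_COUNTS_PER_REV = 720


def battery_energy_wh(voltage_v: float, capacity_ah: float) -> float:
    """Nominal stored energy in watt-hours (nominal voltage x capacity)."""
    return voltage_v * capacity_ah


def average_power_w(energy_wh: float, runtime_min: float) -> float:
    """Average power draw implied by using energy_wh over runtime_min minutes."""
    return energy_wh / (runtime_min / 60.0)


def motor_speed_rad_s(delta_counts: int, dt_s: float,
                      counts_per_rev: int = ENCODER_COUNTS_PER_REV) -> float:
    """Motor shaft angular speed from an encoder count change over dt_s seconds."""
    revolutions = delta_counts / counts_per_rev
    return 2.0 * math.pi * revolutions / dt_s


if __name__ == "__main__":
    energy = battery_energy_wh(BATTERY_VOLTAGE_V, BATTERY_CAPACITY_AH)
    print(f"Nominal battery energy: {energy:.2f} Wh")
    # Approximate runtimes from the table: ~2 h 11 min stationary, ~30 min driving.
    for label, minutes in [("stationary", 131), ("driving", 30)]:
        print(f"Implied average draw ({label}): {average_power_w(energy, minutes):.1f} W")
    # Example: 360 counts in 10 ms is half a motor revolution per 10 ms.
    print(f"Motor speed: {motor_speed_rad_s(360, 0.01):.1f} rad/s")
```

With the table's figures, the nominal energy is about 36.6 Wh, implying an average draw of roughly 17 W while stationary and about 73 W while driving. Because a LiPo pack is not normally run down to zero and some energy is lost in conversion, the real average draw is likely somewhat lower than these estimates.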

Workstation Configuration

Vehicles

- QCar 2*

- QCar*

Available as a single vehicle or a fleet.

Ground Control Station

- High-performance computer with an RTX graphics card featuring Tensor cores for AI workloads

- Three monitors

- High-performance router

- Wireless gamepad

- QUARC Autonomous license

Studio Space

- Driving map featuring intersections, parking spaces, single- and double-lane roads, and roundabouts

- Supporting infrastructure including traffic lights, signs and cones

Supported Software & APIs

- QUARC for Simulink®

- Quanser APIs

- TensorFlow

- Python™ 2.7 / 3 and ROS 2

- CUDA®

- cuDNN

- TensorRT

- OpenCV

- DeepStream SDK

- VisionWorks®

- VPI™

- GStreamer

- Jetson Multimedia APIs

- Docker containers with GPU support

- Simulink® with Simulink Coder

- Simulation and virtual training environments (Gazebo and Quanser Interactive Labs)

- Multi-language development supported by Quanser Stream APIs for inter-process communication

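Because GStreamer and OpenCV both appear among the supported tools, one common Jetson pattern for reading frames from a CSI camera is to hand OpenCV a GStreamer pipeline string. The helper sketched here builds such a string. It is an assumption-laden illustration, not a Quanser API: it assumes the QCar 2 CSI cameras are reachable through NVIDIA's Argus camera stack (`nvarguscamerasrc`) with sensor IDs 0 to 3, and the default resolution and frame rate are placeholders rather than documented QCar 2 camera modes. Quanser's own camera APIs may be the intended route on the vehicle.

```python
def csi_gstreamer_pipeline(
    sensor_id: int = 0,
    width: int = 1280,   # placeholder resolution, not a documented QCar 2 mode
    height: int = 720,
    fps: int = 30,       # placeholder; the specifications list 21 to 120 fps
) -> str:
    """Return a GStreamer pipeline string that delivers BGR frames to an appsink.

    Assumes the camera is exposed via NVIDIA's Argus stack (nvarguscamerasrc)
    and that the four CSI cameras use sensor IDs 0-3 (a hypothetical mapping).
    """
    if not 0 <= sensor_id <= 3:
        raise ValueError("expected sensor_id in 0-3 (four CSI cameras assumed)")
    return (
        # Capture from the CSI sensor into NVMM (hardware-accessible) memory.
        f"nvarguscamerasrc sensor-id={sensor_id} ! "
        f"video/x-raw(memory:NVMM), width={width}, height={height}, framerate={fps}/1 ! "
        # Convert to BGRx in system memory with the Jetson hardware converter.
        "nvvidconv ! video/x-raw, format=BGRx ! "
        # Drop the padding channel so OpenCV receives 3-channel BGR frames.
        "videoconvert ! video/x-raw, format=BGR ! appsink"
    )


if __name__ == "__main__":
    for cam in range(4):
        print(csi_gstreamer_pipeline(sensor_id=cam))
```

On the vehicle, the string would be passed to OpenCV as `cv2.VideoCapture(csi_gstreamer_pipeline(sensor_id=0), cv2.CAP_GSTREAMER)`, which requires an OpenCV build with GStreamer support. Which resolution and frame-rate combinations within the listed 21 to 120 fps range are accepted likely depends on the camera's sensor modes.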
